In today’s guest post, Bruce Tulloch, CEO and Managing Director of BitScope Designs, discusses the uses of cluster computing with the Raspberry Pi, and the recent pilot of the Los Alamos National Laboratory 3000-Pi cluster built with the BitScope Blade.

High-performance computing and Raspberry Pi are not normally uttered in the same breath, but Los Alamos National Laboratory is building a Raspberry Pi cluster with 3000 cores as a pilot before scaling up to 40 000 cores or more next year.

That’s amazing, but why?

Traditional Raspberry Pi clusters

Like most people, we love a good cluster! People have been building them with Raspberry Pi since the beginning, because it’s inexpensive, educational, and fun. They’ve been built with the original Pi, Pi 2, Pi 3, and even the Pi Zero, but none of these clusters have proven to be particularly practical.

That’s not stopped them being useful though! I saw quite a few Raspberry Pi clusters at the conference last week.

One tiny one that caught my eye was from the people at openio.io, who used a small Raspberry Pi Zero W cluster to demonstrate their scalable software-defined object storage platform, which on big machines is used to manage petabytes of data, but which is so lightweight that it runs just fine on this:

There was another appealing example at the ARM booth, where Berkeley Lab’s Singularity container platform was demonstrated running very effectively on a small cluster built with Raspberry Pi 3s.

My show favourite was from the Edinburgh Parallel Computing Centre (EPCC): Nick Brown used a cluster of Pi 3s to explain supercomputers to kids with an engaging interactive application. The idea was that visitors to the stand design an aircraft wing, simulate it across the cluster, and work out whether an aircraft that uses the new wing could fly from Edinburgh to New York on a full tank of fuel. Mine made it, fortunately!

Next-generation Raspberry Pi clusters

We’ve been building small-scale industrial-strength Raspberry Pi clusters for a while now with BitScope Blade.

When Los Alamos National Laboratory approached us via HPC provider SICORP with a request to build a cluster comprising many thousands of nodes, we considered all the options very carefully. It needed to be dense, reliable, low-power, and easy to configure and to build. It did not need to “do science”, but it did need to work in almost every other way as a full-scale HPC cluster would.

I’ll read and respond to your thoughts in the comments below this post too.

Editor’s note:

Here is a photo of Bruce wearing a jetpack. Cool, right?!

16 comments

What’s wrong with using the switch and ethernet fabric as the “bus” on the existing hardware?

That’s a good idea. I think the time is right.

The EPCC Raspberry Pi Cluster is called Wee Archie and it (like the Los Alamos one we built) is a “model”, albeit for a somewhat different purpose. In their case it’s representative of Archer (http://www.archer.ac.uk/), a world-class supercomputer located in the UK and run as the National Supercomputing Service. Nick Brown (https://www.epcc.ed.ac.uk/about/staff/dr-nick-brown) is the guy behind the demo I saw at SC17. Drop him a line!

One of the pending issues with exascale computing is that it is inefficient to checkpoint a computation running on so many cores across so many boxes. At the same time, the probability that all nodes function faultlessly for the duration of the computation decreases exponentially as more nodes are added.

Effectively utilizing distributed memory parallel systems has been compared to herding chickens. When contemplating flocks so large that it takes megawatts to feed them, it may be better to practice by herding cockroaches. This isn’t about performance tuning Fortran codes, but about how to manage hardware faults in a massively distributed parallel computation. As mentioned in the press release, we don’t even know how to boot an exascale machine: by the time the last node boots, multiple other nodes have already crashed. In my opinion, modelling these exascale difficulties with a massive cluster of Raspberry Pi computers is feasible. For example, dumping 1GB of RAM over the Pi’s 100Mbit networking has a similar data-to-bandwidth ratio to dumping 1TB of RAM over a 100Gbit interconnect.
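The two claims in this comment can be checked with a few lines of arithmetic. The sketch below is only illustrative: it assumes decimal units (1GB = 10^9 bytes), ignores protocol overhead, treats node failures as independent, and uses a made-up per-node survival probability of 0.999 that does not come from the post.

```python
def transfer_seconds(data_bytes: float, link_bits_per_second: float) -> float:
    """Ideal time to push data_bytes over a link, ignoring protocol overhead."""
    return data_bytes * 8 / link_bits_per_second


def all_nodes_survive(per_node_survival: float, node_count: int) -> float:
    """Probability that every node stays up, assuming independent failures."""
    return per_node_survival ** node_count


# Checkpoint dump: 1GB over 100Mbit versus 1TB over 100Gbit.
pi_dump = transfer_seconds(1e9, 100e6)
big_dump = transfer_seconds(1e12, 100e9)
print(f"Pi node dump: {pi_dump:.0f} s, big node dump: {big_dump:.0f} s")

# Hypothetical survival probability per node for one run (an assumption).
ASSUMED_SURVIVAL = 0.999
for nodes in (75, 750, 7500):
    chance = all_nodes_survive(ASSUMED_SURVIVAL, nodes)
    print(f"{nodes:5d} nodes: {chance:.3f} chance of a fault-free run")
```

Both dumps take the same ideal time, which is the sense in which the small cluster models the large one, and the chance of a fault-free run shrinks geometrically with node count.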

Spot on, Eric. The issue is one of scale: booting, running the machines, getting the data in and out, and check-pointing to avoid losing massive amounts of computational work.

Some interesting things I learned from this project…

One normally thinks of error rates of the order of 10^-18 as being pretty good, but at this scale one can run into them within the duration of a single shot on a big machine. At exascale this will be worse. The word the HPC community uses for this is “resilience”; the machines need to be able to do the science in a reliable and verifiable way despite these “real world” problems intervening in the operation of the underlying cluster.

They do a lot of “synchronous science” at massive scale, so the need for check-points is unavoidable, and Los Alamos is located at quite a high altitude (about 7,300 feet), so the machines are subject to higher levels of cosmic radiation. This means they encounter higher rates of “analogue errors”, which can cause computation errors and random node crashes.

All these sorts of problems can be modelled, tested and understood using the Raspberry Pi Cluster at much lower cost and lower power than on big machines. Having root access to a 40,000 core cluster for extended periods of time is like a dream come true for the guys whose job is to solve these problems.

Not LFS but not Raspbian either (except for initial testing). They will eventually publish more to explain what they’re doing, but suffice to say it’s a very lean software stack which aims to make it easy for them to simulate the operation of big clusters on this “little” one.

When running a cluster of 750 nodes (as Los Alamos are doing), managing and updating images on all 750 SD cards is, well, a nightmare.

If your cluster is smaller it may not be a problem (indeed we often do just this for small Blade Racks of 20 or 40 nodes).

However, the other issue is robustness.

SD cards tend to wear out (how fast depends on how you use them). PXE (net) boot nodes do not wear out. Local storage may also not be necessary (if you use an NFS or NBD served file system via the LAN), but the bandwidth for accessing the (remote) storage may be a problem if all the nodes jump on the LAN at once, depending on your network fabric and/or NFS/NBD server bandwidth.

The other option is USB sticks (plugged into the USB ports of the Raspberry Pi). They are (usually) faster and (can be) more reliable than SD cards, and you can boot from them too!

All that said, there is no problem with SD cards used within their limitations in Raspberry Pi Clusters.

You guys need to start making a computer “erector set”. As a kid, I received an erector set when I was 7-8 years old for Christmas. I made everything one could think of. I later became a Mechanical Engineer. I designed parts for GE Gas Turbines, and when you switch on your lights, I have a direct connection to powering RPis all over the world.

You have most of the fundamental parts right now. You need a bus, something like the CM DDR3 bus. If the RPi 3B, or the RPi 4 whenever it comes out, had an adaptor or pinouts that connected to that bus, clustering would be easy. I could envision four quad processor CMs, a graphics processor/Bitcoin miner on a CM, a CM with SSD, etc. A computer erector set…

Is there a short video presentation available that discusses the Los Alamos Pi cluster, how it was constructed, what it will be used for and why this solution was chosen over others?

Also, given the interest in OctoPi and other Pi clusters, could there be a section devoted to parallel processing in the Raspberry Pi Forum?

Is the airwing demo freely available?

I’m glad I left their high performance computing department now. This is madness. The Fortran code base so prevalent at the labs is not going to run the same on the ARM architecture when the supercomputers the code is meant to run on are Intel architecture machines. This project is going to give the interns a playing field to learn what they should have learned in college.

I make 120 Raspberry Pi clusters for 3D scanning. Use pure UDP multicasting to control them all using a single network packet transmission. Works really well:-)
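A minimal sketch of how one UDP multicast datagram could reach every node at once. The group address, port, and the tiny packet layout (a 4-byte sequence number followed by a command name) are all hypothetical choices for illustration, not details of the commenter's system.

```python
import socket
import struct
from typing import Tuple

MULTICAST_GROUP = "239.255.0.1"  # hypothetical administratively scoped group
MULTICAST_PORT = 5007            # hypothetical port


def encode_command(sequence: int, name: str) -> bytes:
    """Pack a sequence number and a command name into one datagram payload."""
    return struct.pack("!I", sequence) + name.encode("utf-8")


def decode_command(payload: bytes) -> Tuple[int, str]:
    """Reverse of encode_command: return (sequence, command name)."""
    (sequence,) = struct.unpack("!I", payload[:4])
    return sequence, payload[4:].decode("utf-8")


def open_sender(ttl: int = 1) -> socket.socket:
    """Controller side: a UDP socket whose multicast packets stay on the LAN."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, struct.pack("b", ttl))
    return sock


def open_receiver(group: str = MULTICAST_GROUP, port: int = MULTICAST_PORT) -> socket.socket:
    """Node side: bind to the port and join the multicast group."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    membership = socket.inet_aton(group) + socket.inet_aton("0.0.0.0")
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP, membership)
    return sock


def broadcast_command(sock: socket.socket, sequence: int, name: str) -> None:
    """Send one datagram; every node that joined the group receives it."""
    sock.sendto(encode_command(sequence, name), (MULTICAST_GROUP, MULTICAST_PORT))
```

On the controller, `broadcast_command(open_sender(), 1, "capture")` would send a single packet; each node would call `open_receiver()` once and then loop on `recvfrom`. UDP gives no delivery guarantee, so a real setup would likely need acknowledgements or retries on top.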

That’s very similar to what we want to do with a new grassroots project. But instead of a physically co-located cluster, we are thinking of a ‘collective’ (kind of Borg, but nice…) for doing Three.js GPU 3D rendering. I’ve got a prototype running; if you Bing or Google sustasphere, you will find the corresponding GitHub (not completely up to date, however). The current prototype renders (obviously) in your browser. With the collective, your browser calls will be routed to (hopefully) thousands of Raspberry Pis, each crunching a part of the 3D rendering in real time. In ‘my head’, I’m thinking about openSUSE stacked with Express.js.

For the energy supply of each node, we thank the wind and an Archimedean screw, with a hydraulic head, with a simple bicycle dynamo…

Nice, but why? We would like to honor an echo from the past (The Port Huron Statement); introduce a virtual sphere of dignity. Giving people the opportunity to express their emotions; again defining the meaning of dignity. Imagine Mozart performing his Nozze di Figaro (for me a perfect example of bringing art to the people and sharing thoughts about morality); and being able to actually be there, move around, ‘count the nostrils’ and maybe even ‘get physical’.

Yep, you’ll need some GPU-collective for that.

Based on your experience, could you advise us on our road ahead? Help us make sound decisions?

> recent pilot of the Los Alamos National Laboratory 3000-Pi cluster

It should read 750-Pi cluster, 5 blades of 150 Pis each, with 3000 cores total (4 cores each per CPU)

Ok, I’m a nuby on raspberry pi 3’s. But I was wondering if they used LFS with the bitscope blade cluster? …and if so, how did it perform?

Why is it “important to avoid using the Micro SD cards” ?

I have an application in mind for a pi cluster, for which I will need local storage. If I can’t use the MicroSD card, then what?

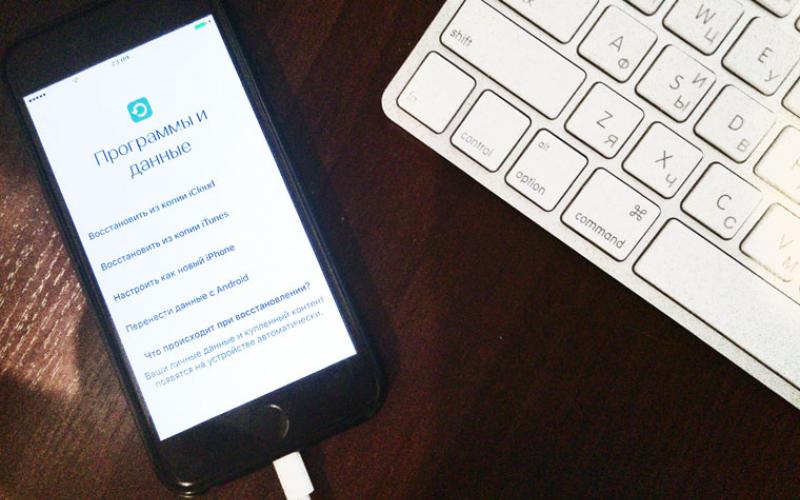

This is quite possibly the cheapest and most accessible cluster you can build at home.

Right now it is crunching seti@home tasks.

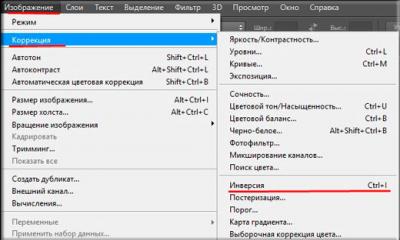

Assembly

Assembly is not particularly difficult. Here is the list of materials needed to replicate it:

- 4 OrangePi PC boards (the One will also do) with power cables

- 16 PCB standoffs for fastening the boards together

- 4 (short) standoffs for mounting on a base or for use as feet

- 2 pieces of acrylic glass (top and bottom covers)

- A 92 mm fan

- 4 angle brackets for mounting the fan

- A 100 Mbit/s Ethernet hub, preferably one powered from either 5 or 12 volts

- Ethernet patch cords in the required quantity (incidentally, since the network is 100 Mbit/s anyway, you can use 4-wire flat telephone cable and save a little on cabling)

- A power supply (more on that later)

- A cheap USB WiFi adapter for connecting to the outside world

Screw the four boards together, attach the top and bottom covers, and mount the fan using the angle brackets. Place the hub on the top cover and connect everything together over Ethernet.

And this is how the “product” looks “from the back”.

Unfortunately there was no blue electrical tape to hand, so the hub is held on with rubber bands.

Power

Each OPi draws at least one amp (the manufacturer recommends a supply rated for at least 1.5 to 2 A). The fan needs 12 volts, and so does the hub, although 5-volt models exist.

So you will need a good power supply with two output voltages.
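As a rough sizing aid, the 5 V rail budget for the four boards can be worked out as below. This is only a sketch: it covers the boards alone, and the fan and hub are left out because their current draw is not stated in the post.

```python
BOARD_COUNT = 4    # four OrangePi boards, as in the parts list
RAIL_VOLTS = 5.0   # the boards are fed from the 5 V rail


def rail_budget(amps_per_board: float, boards: int = BOARD_COUNT,
                volts: float = RAIL_VOLTS):
    """Return (total amps, total watts) needed on the 5 V rail for the boards."""
    total_amps = amps_per_board * boards
    return total_amps, total_amps * volts


# 1 A is the stated minimum draw; 1.5 to 2 A is the recommended supply rating.
for amps in (1.0, 1.5, 2.0):
    total_amps, total_watts = rail_budget(amps)
    print(f"{amps:.1f} A per board -> {total_amps:.1f} A, {total_watts:.1f} W at 5 V")
```

Sizing for the upper recommendation means a 5 V output good for about 8 A, plus whatever the 12 V side needs for the fan and hub.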

An old computer power supply will do fine, but it is better to use a modern fanless switching supply, for example one from MeanWell.

That is what I did, packing it into the case of a vintage power supply and bringing out an ordinary Molex connector (as on a computer).

To “distribute” the 5 volts we will use a modified cheap USB hub. You can either drill out the chip or simply cut off the data pins, leaving only the power and ground circuits. I settled on the second method, although I also laid “thick” connections on the 5 V line inside. Then add a mating Molex connector for the link to the power supply. It ends up looking roughly like this:

And here is the whole construction assembled:

System

Basically, this is just a “small local network of 4 computers”.

The base system is ordinary Debian, which has already been discussed a lot.

Network

The topmost node is clunode0. It can connect to the external network over WiFi, and it shares that “internet” with the machines clunode1, clunode2, and clunode3. It also runs the NFS server for shared storage and dnsmasq for handing out DHCP addresses of the form 10.x.x.x.

On clunode0, /etc/network/interfaces contains an entry roughly like this:

auto wlan0
allow-hotplug wlan0
iface wlan0 inet dhcp
    wpa-scan-ssid 1
    wpa-ap-scan 1
    wpa-key-mgmt WPA-PSK
    wpa-proto RSN WPA
    wpa-pairwise CCMP TKIP
    wpa-group CCMP TKIP
    wpa-ssid "MyWiFi"
    wpa-psk "MyWiFiPassword"
    post-up /usr/local/bin/masquerade.sh eth0 wlan0
iface default inet dhcp

That said, the situation there seems to have changed and the binary can now be downloaded from the site. I have not checked; it was easier to build it myself.

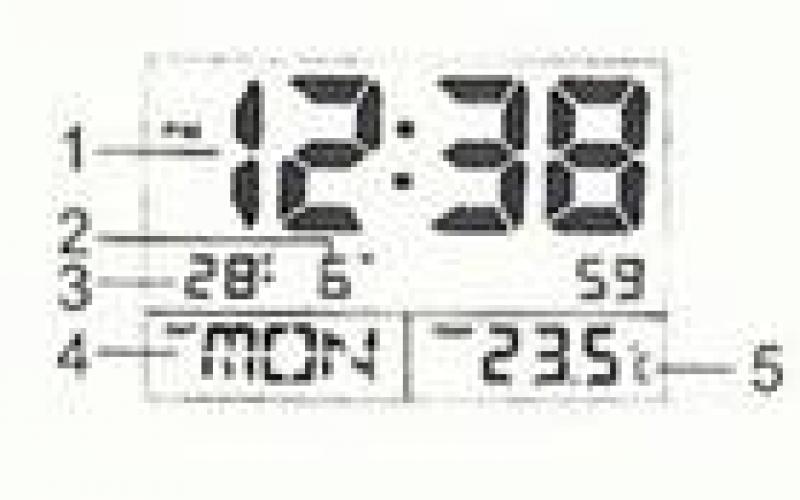

You can also install and configure the console utility boinctui. It all looks quite decent (animated GIF):

Prospects

The idea can be taken further. Here are a few ideas off the top of my head:

- The first board (clunode0) as a load balancer, clunode1 and clunode2 as web servers or application servers, clunode3 as the database ==> a micro data center :)

- Hadoop (there are already cases of this; people build clusters on the Raspberry Pi)

- A Proxmox cluster, although I am not sure all the parts are available for ARM

- A cryptocurrency miner, provided of course that you can find a cryptocurrency that is still profitable to mine on a CPU, and profitable to mine at all.

Thanks for reading to the end.

The Cluster HAT board is one answer to the problem of building cluster computing setups. Distributed computing is hard, and this tiny hardware kit is one solution to that problem.

Although building one is not all that simple, it is one of the most impressive Raspberry Pi projects.

Why Cluster HAT?

The Cluster HAT (Hardware Attached on Top) interfaces a (controller) Raspberry Pi A+ / B+ / 2 / 3 / 4 with four Raspberry Pi Zero boards. It is configured to use USB Gadget mode. It is also an ideal tool for teaching, testing, or simulating small clusters.

The Cluster HAT builds on the flexibility of the Raspberry Pi, letting programmers experiment with cluster computing.

It is important to note that the HAT does not ship with a Raspberry Pi or Pi Zero. Both boards have to be purchased separately. The manufacturer Pimoroni provides assembly and control instructions on its product page. The company also states that there are 3 ways to configure the HAT.

Cluster HAT technical specifications

- The HAT can be used with any of the Pi Zero 1.2, Pi Zero 1.3, and Pi Zero W modules.

- USB Gadget mode: Ethernet and serial console.

- Onboard 4-port USB 2.0 hub.

- The Raspberry Pi Zeros are powered via the controller Pi GPIO (USB optional).

- Raspberry Pi Zero power is controlled via the controller Pi GPIO (I2C).

- Connector for the controller serial console (FTDI Basic).

- The controller Pi can be rebooted without interrupting power to the Pi Zeros (the network recovers on boot).

The kit includes:

- HAT mounting kit (standoffs and screws)

- Short USB cable (colour may vary)

In conclusion

The Cluster HAT v2.3 board is available to buy now, and although it is not yet in stock at

One popular use of Raspberry Pi computers is building clusters. Raspberry Pies are small and inexpensive so it's easier to use them to build a cluster than it would be with PCs. A cluster of Raspberry Pies would have to be quite large to compete with a single PC; you'd probably need about 20 Pies to produce a cluster with as much computing power as a PC. Although a Pi cluster may not be that powerful, it's a great opportunity to learn about distributed computing.

There are several different types of distributed computer which can be used for different purposes. There are super computers that are used for solving mathematical problems like modelling weather conditions or simulating chemical reactions. These systems often use the Message Passing Interface (MPI). A team at the University of Southampton built a 64 node MPI based super computer. This system is used for teaching students about supercomputing.

Another technology that's often used in distributed computing is Hadoop, which distributes data across many nodes. Hadoop is often used for processing large datasets and data mining. An engineer at Nvidia built a small Hadoop cluster using Raspberry Pies. He uses his cluster to experiment and test ideas before deploying them on more powerful systems.

Using a Raspberry Pi cluster as a web server

Clusters can be used as web servers. Many web sites get too much traffic to run on a single server, so several servers have to be used. Requests from web browsers are received by a node called a load balancer, which forwards requests to worker servers. The load balancer then forwards responses from servers back to the clients.
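The forwarding idea can be illustrated with a small round-robin selector. This is a sketch of one simple policy only: round-robin is an assumption made here for clarity, not necessarily the policy a given load balancer uses, and the worker addresses are hypothetical.

```python
from itertools import cycle
from typing import Dict, Iterable, List


class RoundRobinBalancer:
    """Hands out worker addresses in a fixed rotating order."""

    def __init__(self, workers: Iterable[str]) -> None:
        self._workers = list(workers)
        if not self._workers:
            raise ValueError("at least one worker is required")
        self._rotation = cycle(self._workers)

    def pick_worker(self) -> str:
        """Return the worker that should receive the next request."""
        return next(self._rotation)


def distribute(balancer: RoundRobinBalancer, paths: Iterable[str]) -> Dict[str, List[str]]:
    """Assign each request path to a worker and return the resulting mapping."""
    assignments: Dict[str, List[str]] = {}
    for path in paths:
        assignments.setdefault(balancer.pick_worker(), []).append(path)
    return assignments


# Hypothetical worker addresses for illustration.
balancer = RoundRobinBalancer(["192.168.1.11", "192.168.1.12", "192.168.1.13"])
print(distribute(balancer, ["/", "/about", "/blog", "/contact"]))
```

With three workers and four requests, the fourth request wraps around to the first worker, so load spreads evenly as long as requests cost roughly the same.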

This site is now hosted on a Raspberry Pi cluster. The worker nodes are standard web servers that contain identical content. I just installed Apache on them and copied my site to each node.

I use an extra Raspberry Pi to host a development copy of this site, and to control the cluster. This Pi is connected to my local network via wifi, so I can access the development copy of my site from my laptop.

The extra Pi also has an ethernet connection to the Pi cluster. When I want to update my site, I can transfer changes from the development site to the live site on the cluster. Site updates are put into .tar.gz files which the worker nodes automatically download from the development site. Once downloaded, updates are then unpacked into the local file system.
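The pack-and-unpack half of that update cycle might look like the sketch below. It is not the author's script: the function names and directory layout are hypothetical, and the download step between the development Pi and the workers is left out.

```python
import tarfile
from pathlib import Path
from typing import List


def pack_update(site_dir: Path, archive_path: Path) -> None:
    """Development side: bundle every file under site_dir into a .tar.gz."""
    with tarfile.open(archive_path, "w:gz") as archive:
        for path in sorted(site_dir.rglob("*")):
            if path.is_file():
                archive.add(path, arcname=path.relative_to(site_dir).as_posix())


def unpack_update(archive_path: Path, web_root: Path) -> List[str]:
    """Worker side: unpack a downloaded update into the local web root."""
    web_root.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive_path, "r:gz") as archive:
        names = archive.getnames()
        # Refuse entries that would escape the web root.
        for name in names:
            if name.startswith("/") or ".." in Path(name).parts:
                raise ValueError(f"unsafe path in archive: {name}")
        archive.extractall(web_root)
    return sorted(names)
```

A worker would call `unpack_update` after fetching the archive from the development site. Storing paths relative to the site root keeps the archive independent of where each Pi keeps its web root.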

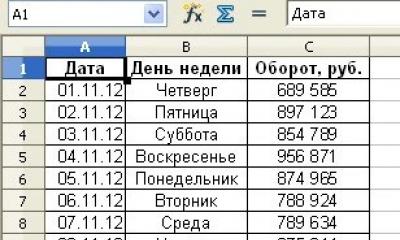

Configuring the Raspberry Pi servers

All of the Pies in this system are headless. I can log into the Pi with the development site using the Remote Desktop Protocol, and from that Pi I can log into the worker Pies using SSH.

All the Pies in the cluster use a static IP address. In a larger cluster it would probably be better to set up a DHCP server on the load balancer. The IP addresses used in the cluster are on the 192.168.1.xxx subnet.

For each worker Pi, I set up a 4GB SD card using the latest version of Raspbian. In raspi-config I set the following options:

- expand fs

- set the hostname

- set the password

- set memory split to 16MB for the GPU

- overclock the CPU to 800MHz

- enable ssh

On each card I installed Apache and some libraries required by my CMS, libxml2 and python-libxml2. I used this command to enable mod rewrite, which is also required by my CMS:

$ sudo a2enmod rewrite

Finally, I copied some scripts onto each SD card which allow each Pi to synchronize its contents with the development Pi. In a larger cluster it would be worth creating an SD card image with all of these modifications made in advance.

Building a load balancer

The load balancer must have two network interfaces, one to receive requests from a router, and another network interface to forward requests to the server cluster. The nodes in the cluster are on a different subnet than the rest of the network, so the IP address of the load balancer's second interface must be on the same subnet as the rest of the cluster. The load balancer's first interface has IP address 192.168.0.3 while the second interface's IP address is 192.168.1.1. All the Pies in the cluster have IP addresses on the 192.168.1.xxx subnet.
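The addressing scheme can be sanity-checked with Python's standard ipaddress module. The sketch assumes a /24 prefix on both interfaces, which matches the 192.168.1.xxx notation but is not stated explicitly; the worker address used in the example is hypothetical.

```python
import ipaddress

# The two load balancer interfaces, with an assumed /24 prefix.
ROUTER_SIDE = ipaddress.ip_interface("192.168.0.3/24")
CLUSTER_SIDE = ipaddress.ip_interface("192.168.1.1/24")


def on_cluster_subnet(address: str) -> bool:
    """True if the address is directly reachable from the cluster-side interface."""
    return ipaddress.ip_address(address) in CLUSTER_SIDE.network


print(CLUSTER_SIDE.network)               # 192.168.1.0/24
print(on_cluster_subnet("192.168.1.20"))  # hypothetical worker address
print(on_cluster_subnet("192.168.0.3"))   # the router-side interface itself
```

A worker address passes the check only if it sits on the cluster-side network, which is why the second interface has to live on that subnet.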

I built my load balancer using an old PC with 512MB of RAM and a 2.7GHz x86 CPU. I added a second PCI ethernet card and installed Lubuntu, a lightweight version of Ubuntu. I was going to install Ubuntu, but this PC is pretty old, so Lubuntu is probably a better choice. I used a PC because I wasn't sure if a single Pi would be powerful enough to act as a load balancer, and a Pi only has one ethernet connection. I want both of my load balancer's network connections to be ethernet for improved performance and stability.

Note that IP forwarding is not enabled. The load balancer isn't a router; it should only forward HTTP requests and not every IP packet that it receives.

Setting up the load balancer software

There are many different software implementations of load balancing. I used Apache's load balancer module because it's easy to set up. First I made sure my PC's OS was up to date:

sudo apt-get update

sudo apt-get upgrade

Then I installed Apache:

sudo apt-get install apache2

These Apache modules need to be enabled:

sudo a2enmod proxy

sudo a2enmod proxy_http

sudo a2enmod proxy_balancer

The next step is to edit /etc/apache2/sites-available/default in order to configure the load balancer. The proxy module is needed for HTTP forwarding, but it's best not to allow your server to behave as a proxy. Spammers and hackers often use other people's proxy servers to hide their IP address, so it's important to disable this feature by adding this line:

ProxyRequests off

Although proxy requests are disabled, the proxy module is still enabled and acts as a reverse proxy. Next, define the cluster and its members by adding this code:

Balancer manager interface

The balancer module has a web interface that makes it possible to monitor the status of the back end servers, and configure their settings. You can enable the web interface by adding this code to /etc/apache2/sites-available/default:

It's also necessary to instruct Apache to handle requests to the /balancer-manager page locally instead of forwarding these requests to a worker server. All other requests are forwarded to the cluster defined above.

ProxyPass /balancer-manager !

ProxyPass / balancer://rpicluster/

Once these changes have been saved, Apache should be restarted with this command:

$ sudo /etc/init.d/apache2 restart

When I open a browser and go to http://192.168.0.3 I see the front page of my web site. If I go to http://192.168.0.3/balancer-manager, I see this page in the image on the right.

The last step in getting the cluster online is adjusting the port forwarding settings in my router. I just needed to set up a rule for forwarding HTTP packets to http://192.168.0.3.

Here's the complete /etc/apache2/sites-available/default for the load balancer:

The Raspberry Pi is used by enthusiasts for all sorts of purposes. For example, enthusiast David Guill decided to use it to build a cluster: a group of interconnected computers that, from the user's point of view, present a single hardware resource. The project was named the 40-Node Raspi Cluster. It is worth noting that David needed the cluster to gain experience programming distributed systems, so the Raspberry Pi cluster stands in for a real supercomputer while he learns.

A Raspberry Pi cluster could have been put together the simple way, using a rack or an inexpensive cabinet instead of a case, but since David is into modding he decided to make his cluster stylish, and as close as possible in look and convenience to commercial products. And it has to be said that David succeeded, because his project is far better thought out than many commercial cases. Incidentally, the case of the 40-Node Raspi Cluster is made from acrylic panels, laser-cut to size and glued by hand.

The main distinguishing features of the 40-Node Raspi Cluster are: cool looks, a fairly compact size (like a full tower), convenient access to all components with the ability to replace them without disassembling the case, screwless mounting of the case parts and many of the components, and tidy cabling (and there is a great deal of wiring in this project). The project comprises 40 compact Raspberry Pi computers (40 Broadcom BCM2835 cores at 700 MHz, 20 GB of distributed RAM), two 24-port switches, one ATX power supply, five 1 TB hard drives (expandable to 12), 440 GB of flash memory, and a router with wireless connectivity.

The Raspberry Pi computers are grouped four to a custom acrylic mount, and there are ten such mounts. This arrangement (as in blade servers) gives convenient access to the compact computers and makes them easy to replace. Each Raspberry Pi blade has its own compact DC-DC converter, fed from the shared ATX power supply. The cluster is cooled by four 140 mm fans with filters mounted behind them.

An abundance of LEDs adds extra modding flair to the 40-Node Raspi Cluster: there are more than three hundred of them (on the mini computers, switches, routers, and fans), and during operation they blink according to the load. The project measures 25 x 39 x 55 cm, and the approximate build cost is 3000 dollars.

You can see the exterior, the interior, and the features of the 40-Node Raspi Cluster in the attached photos and video. If the project interests you, you can learn more by visiting the corresponding post on David's personal site, where he described the construction of this monster in great detail.